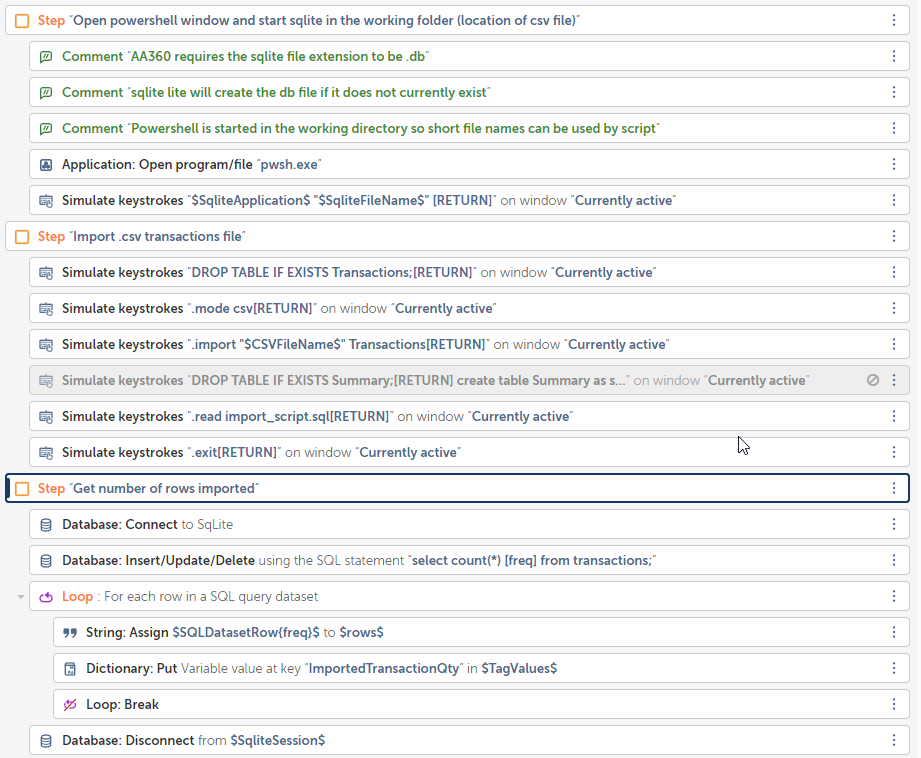

I'm currently building a bot that handles large amounts of data. The bot times out when it runs the 'CSV/TXT: Read' action. When I read a similar file with 20,000 rows and 12 columns, it finishes within a minute.

Is the problem I'm having due to the limitations of the data table variable, or does it have to do with the CSV file itself? If it is the CSV file, does anyone know a good workaround for reading such a huge file?

Thank you in advance!